Prerequisites

We assume that anyone who wants to understand Kubernetes already understands how Docker works, how Docker images are created, and how containers run as standalone units. To reach advanced Kubernetes configuration, one should also understand basic networking and how network protocols communicate.

How the abbreviation K8s is defined

The 8 stands for the eight letters between the K and the s in Kubernetes: u, b, e, r, n, e, t, e.
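The abbreviation follows a general pattern: keep the first and last letters and replace everything in between with a count. A minimal sketch of that rule follows; the function name `numeronym` is a hypothetical helper, not part of any Kubernetes tooling.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + count of inner letters + last letter."""
    if len(word) <= 2:
        return word  # nothing to abbreviate
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # K8s
```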

Kubernetes is an extensible, portable, open-source platform originally designed by Google and open-sourced in 2014. It is mainly used to automate the deployment, scaling, and operation of container-based applications across a cluster of nodes.

Features

Pod

A pod is the smallest and simplest unit of a Kubernetes application. It represents a set of processes running in the cluster. A pod is not itself a container: it wraps one or more containers (for example, Docker containers) that are deployed together.

A pod is a unit of deployment in Kubernetes and has a single IP address, which all of its containers share.
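To make the idea concrete, a pod is described to the cluster as a small manifest. The sketch below builds one as a Python dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The pod name `web-pod`, the container name `web`, the label, and the image `nginx:1.25` are placeholders chosen for illustration.

```python
import json

# Hypothetical names and image; replace them with your own.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-pod", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

# Serialize the manifest so it can be written to a file.
print(json.dumps(pod_manifest, indent=2))
```

Saved to a file such as `pod.json`, output like this could be submitted with `kubectl apply -f pod.json`.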

ReplicaSet

A ReplicaSet ensures that a specified number of pod replicas are running at any given time. It supersedes the ReplicationController because it is more powerful and supports "set-based" label selectors.
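The following sketch shows, under simplifying assumptions, how a set-based selector picks out pods and how the replica count is compared against the desired number. The operator names `In`, `NotIn`, `Exists`, and `DoesNotExist` are the ones Kubernetes uses; the helper functions `matches` and `replica_delta` and the sample labels are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(labels: Labels, expressions: List[dict]) -> bool:
    """Return True if the labels satisfy every set-based expression."""
    for expr in expressions:
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In" and labels.get(key) not in values:
            return False
        if op == "NotIn" and labels.get(key) in values:
            return False
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True


def replica_delta(pods: List[Labels], expressions: List[dict], desired: int) -> int:
    """Positive result: pods to create. Negative result: pods to delete."""
    running = sum(1 for labels in pods if matches(labels, expressions))
    return desired - running


selector = [{"key": "tier", "operator": "In", "values": ["frontend", "cache"]}]
pods = [
    {"app": "shop", "tier": "frontend"},
    {"app": "shop", "tier": "cache"},
    {"app": "shop", "tier": "db"},
]
print(replica_delta(pods, selector, desired=3))  # 1
```

Two of the three pods match the selector, so one more replica is needed to reach the desired count of three.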

Persistent Storage: Kubernetes provides an essential feature called 'persistent storage' for data that must not be lost when a pod is killed or rescheduled. Kubernetes supports various storage systems, such as Google Compute Engine's Persistent Disks (GCE PD) or Amazon Elastic Block Store (EBS). It also supports distributed file systems such as NFS or GFS.

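Automatic Bin Packing: Kubernetes lets the user declare the minimum and maximum compute resources (such as CPU and memory) for each container, and uses those declarations to place containers onto nodes so that node resources are used efficiently.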
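In a container spec, the minimum is declared under `requests` and the maximum under `limits`. The sketch below, with hypothetical values and helper names (`cpu_millicores`, `fits`), converts a CPU quantity to millicores and checks whether a container's request fits into a node's free capacity. It illustrates the fit check only and is not the scheduler's actual algorithm.

```python
def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "500m" or "2" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


# Hypothetical container resources: requests are the guaranteed minimum,
# limits are the maximum the container may use.
resources = {
    "requests": {"cpu": "250m"},
    "limits": {"cpu": "500m"},
}


def fits(node_free_millicores: int, container_resources: dict) -> bool:
    """A container fits if the node's free CPU covers its request."""
    requested = cpu_millicores(container_resources["requests"]["cpu"])
    return requested <= node_free_millicores


print(fits(300, resources))  # True
print(fits(200, resources))  # False
```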

Self-Healing: This feature plays an important role in Kubernetes. Kubernetes automatically restarts containers that fail during execution, and it automatically stops containers that do not respond to a user-defined health check.

Automated rollouts and rollbacks: Using rollouts, Kubernetes distributes changes and updates to an application or its configuration. If a problem occurs, Kubernetes rolls those changes back for you.

Service Discovery and load balancing: Kubernetes assigns IP addresses and a single DNS name to a set of containers, and balances the load across them.
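A simplified sketch of the idea: a stable DNS name maps to a service, and the service spreads requests over the pod IPs behind it. Round-robin is an assumption made for this illustration; the distribution policy in a real cluster depends on how it is configured. The class `Service`, the registry dictionary, and the IP addresses are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


class Service:
    """A stable name that fronts a changing set of pod IP addresses."""

    def __init__(self, name: str, endpoints: List[str]) -> None:
        self.name = name
        self._endpoints = cycle(endpoints)  # endless round-robin iterator

    def pick(self) -> str:
        """Return the next pod IP in rotation."""
        return next(self._endpoints)


# Hypothetical service and pod IPs, keyed by the service's cluster DNS name.
registry: Dict[str, Service] = {
    "web.default.svc.cluster.local": Service("web", ["10.1.0.4", "10.1.0.5"]),
}

svc = registry["web.default.svc.cluster.local"]
print([svc.pick() for _ in range(3)])  # ['10.1.0.4', '10.1.0.5', '10.1.0.4']
```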

Kubernetes Architecture

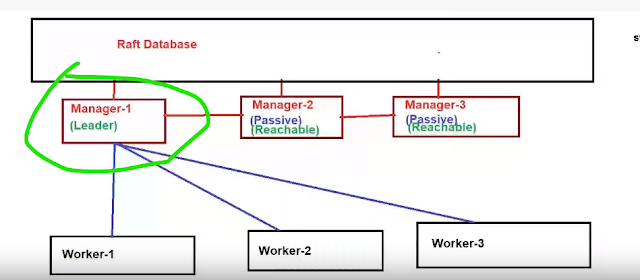

The architecture of Kubernetes follows the client-server model. It consists of the following two main components:

- Master Node (Control Plane)

- Worker Node

The master node consists of the following components:

- API Server

- Scheduler

- Controller Manager

- ETCD

API Server

The Kubernetes API server receives the REST requests sent by the user. It validates each request, processes it, and then executes it. After execution, the resulting state of the cluster is saved in 'etcd', a distributed key-value store.
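The receive, validate, and persist flow can be sketched as follows. This is a toy model, not the API server's implementation: a dictionary stands in for etcd, the key layout is illustrative, and the function `handle_create` and its validation rules are hypothetical.

```python
from typing import Dict

# Stand-in for etcd: a plain dictionary keyed by resource path.
store: Dict[str, dict] = {}


def handle_create(obj: dict) -> int:
    """Validate a create request and persist it; returns an HTTP-style status code."""
    # Validation: reject objects that lack required top-level fields.
    for field in ("apiVersion", "kind", "metadata"):
        if field not in obj:
            return 422
    name = obj["metadata"].get("name")
    if not name:
        return 422
    key = f"/{obj['kind'].lower()}s/{name}"  # illustrative key layout
    if key in store:
        return 409  # an object with this name already exists
    store[key] = obj  # persist the resulting state
    return 201


pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "web-pod"}}
print(handle_create(pod))  # 201
print(handle_create(pod))  # 409
```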

Scheduler

The scheduler on the master node distributes work to the worker nodes.

In other words, it is the process responsible for assigning pods to available worker nodes.
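Assigning a pod to a node can be pictured as two steps: filter out nodes that cannot run the pod, then score the remaining ones and pick the best. The sketch below considers CPU only, and its scoring rule (prefer the node with the most free CPU) is an assumption for illustration; the function `schedule` and the node names are hypothetical.

```python
from typing import Dict, Optional


def schedule(pod_cpu_request: int, free_cpu_by_node: Dict[str, int]) -> Optional[str]:
    """Pick a node for a pod, or return None if no node can fit it."""
    # Filter: keep only nodes with enough free CPU (in millicores).
    feasible = {
        node: free
        for node, free in free_cpu_by_node.items()
        if free >= pod_cpu_request
    }
    if not feasible:
        return None  # the pod stays unscheduled
    # Score: assumed rule for this sketch, prefer the node with the most free CPU.
    return max(feasible, key=feasible.get)


nodes = {"node-a": 500, "node-b": 1500, "node-c": 200}
print(schedule(400, nodes))   # node-b
print(schedule(2000, nodes))  # None
```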

Controller Manager

The controller manager is a daemon that runs the cluster's controllers in non-terminating control loops. Each controller on the master node performs a specific task and manages part of the cluster state. The controller manager runs various types of controllers, which handle nodes, endpoints, and so on.
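A control loop repeatedly compares the observed state with the desired state and corrects any difference. The sketch below models this for a replica count, with a simulated pod failure after the first pass. The function `reconcile_once` and the pod naming scheme are hypothetical, and the loop runs three passes where a real controller would run indefinitely.

```python
import itertools
from typing import List

_ids = itertools.count(1)  # source of unique pod numbers


def reconcile_once(desired_replicas: int, pods: List[str]) -> List[str]:
    """One loop iteration: bring the pod list to the desired length."""
    pods = list(pods)
    while len(pods) < desired_replicas:
        pods.append(f"pod-{next(_ids)}")  # start a replacement pod
    while len(pods) > desired_replicas:
        pods.pop()  # remove a surplus pod
    return pods


pods: List[str] = []
for step in range(3):  # three passes are enough to show convergence
    pods = reconcile_once(3, pods)
    if step == 0:
        pods.pop(0)  # simulate a pod failure after the first pass
print(pods)  # ['pod-2', 'pod-3', 'pod-4']
```

After the simulated failure of `pod-1`, the next pass notices that only two pods remain and starts `pod-4` to restore the desired count.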

ETCD

etcd is an open-source, simple, distributed key-value store that holds the cluster data.

Worker Node

Worker nodes in Kubernetes were formerly known as minions. A worker node is a physical or virtual machine that runs applications in pods.

Kubelet

The kubelet is an agent service that runs on each worker node in the cluster. It ensures that the pods and their containers are running and healthy. The kubelet on each worker node communicates with the master node, and it starts, stops, and maintains the containers, organized into pods, as directed by the master node.

Pods

A pod is a group of one or more containers that logically run together on a node. One worker node can run multiple pods.

Kube-proxy

kube-proxy is a network proxy service that runs on each worker node in the cluster. Its main job is request forwarding. Each node reaches Kubernetes services through kube-proxy.

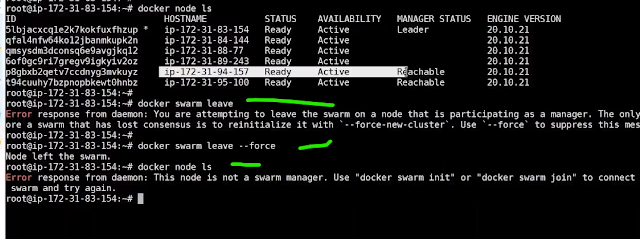

Installation